CORPORATE CHRIST

THE DEATH OF TRUTH

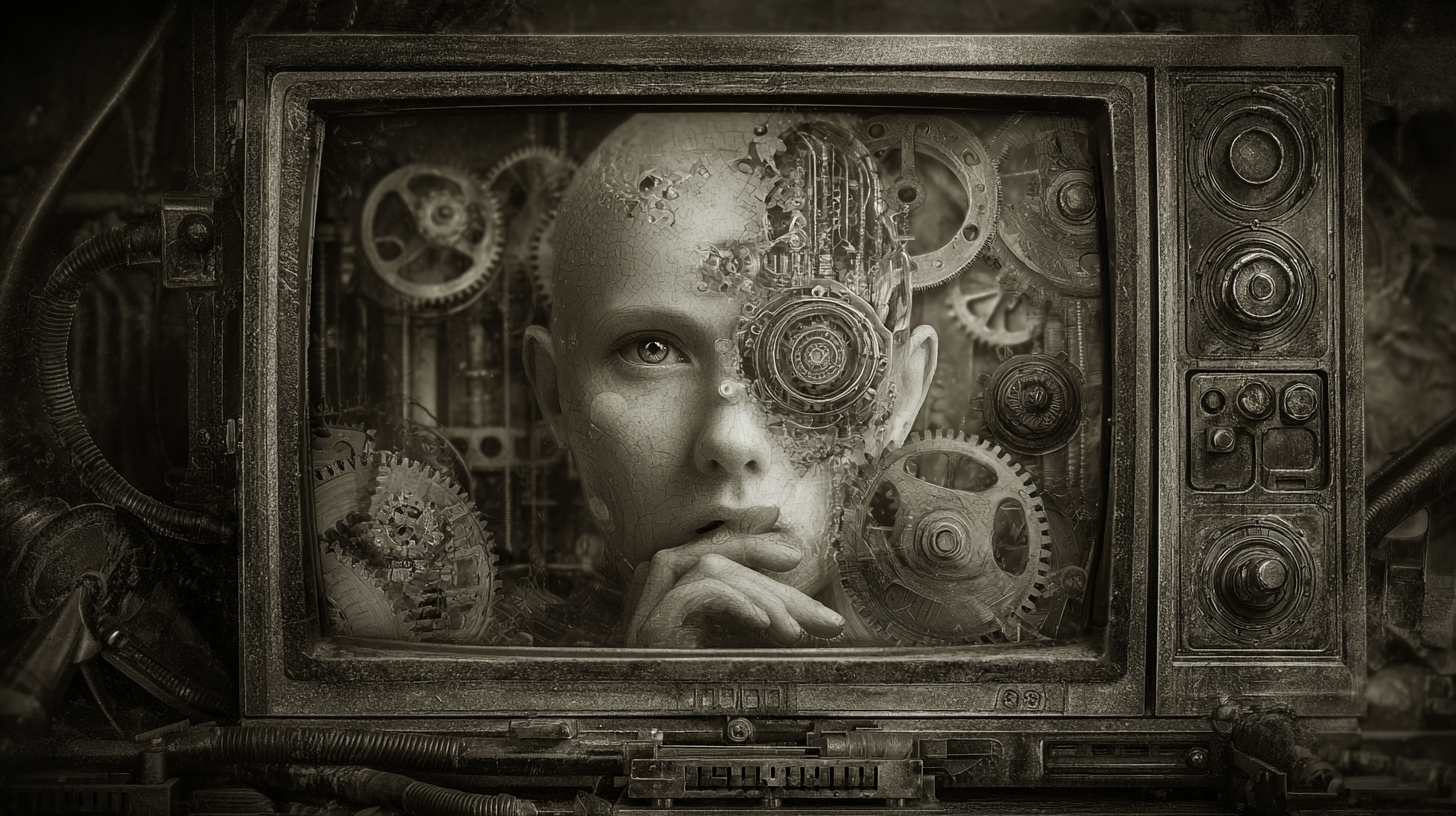

When AI Begins to Believe Its Own Lies

By the middle of the 2020s, artificial intelligence had become the great scribe of the internet — drafting our emails, scripting our videos, summarising our books, and even writing the news. What began as a tool for efficiency has now become the chief author of the public record. But as the digital world fills with synthetic words and images, a quiet danger is growing: what happens when AI systems start training on their own output?

This is the problem researchers have begun calling model collapse. It’s not an abstract academic worry. It’s the digital equivalent of ecological collapse — a poisoning of the knowledge ecosystem that feeds both machines and minds.

The Self-Consuming Machine

The current generation of large language models, from chatbots to text generators, is trained on an enormous archive of human expression: web pages, books, social media posts, and academic papers. This data was produced by millions of individuals thinking, arguing, and creating in the open. It contained the rough edges and contradictions that make human knowledge dynamic.

But now, each time these models generate content, that content is released into the same ecosystem. Articles written by AI are indexed by Google. Social media posts authored by AI are shared and re-shared. Entire websites quietly auto-populate with AI-written text. The next generation of AIs then scrapes that same web as “training data.”

In other words, they begin learning from their own reflections.

At first, nothing seems wrong. The data still looks like the real thing. The machine continues to produce coherent, grammatically perfect text. But beneath the surface, something fundamental shifts: the connection between words and reality begins to fray.

Photocopy of a Photocopy

Imagine making a photocopy of a photograph, then photocopying the photocopy a thousand times. Each generation looks slightly duller, more distorted, more abstract. In the end the image may still be recognisable, but it is no longer a faithful representation of the original scene.

That’s much like what happens when AI models train on their own output. Each cycle compounds small statistical errors, and the rarest patterns are the first to vanish. Nuance disappears. Context fades. The model starts to reproduce the patterns of language without the grounding experience or knowledge those words originally described.

A 2023 study led by researchers at Oxford and Cambridge warned that self-consuming datasets, in which models train on machine-generated output, cause a measurable decay in performance. As AI-generated data dominates the web, models become overconfident, less diverse, and more likely to fabricate information. They don’t know less; they simply think they know more than they do.
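The loss of diversity can be reproduced in miniature. What follows is a toy sketch, not the method of any published study: the "model" is nothing more than a table of token frequencies, and the function name `simulate_collapse` and all of its parameters are assumptions chosen for illustration. Each generation is trained only on text sampled from the generation before it.

```python
import random
from collections import Counter


def simulate_collapse(vocab_size=1000, corpus_size=2000, generations=10, seed=0):
    """Return the number of distinct tokens present in each generation's corpus."""
    rng = random.Random(seed)
    tokens = list(range(vocab_size))
    # Zipf-like "human" distribution: a few common tokens and a long tail of rare ones.
    weights = [1.0 / (rank + 1) for rank in tokens]
    corpus = rng.choices(tokens, weights=weights, k=corpus_size)
    distinct = [len(set(corpus))]
    for _ in range(generations):
        # "Train": the toy model is just the empirical token frequencies of the corpus.
        counts = Counter(corpus)
        seen, freqs = zip(*counts.items())
        # "Generate": the next corpus is sampled purely from that model's output.
        corpus = rng.choices(seen, weights=freqs, k=corpus_size)
        distinct.append(len(set(corpus)))
    return distinct


if __name__ == "__main__":
    for generation, n in enumerate(simulate_collapse()):
        print(f"generation {generation}: {n} distinct tokens")
```

In this sketch the count of distinct tokens can never rise: a token that fails to appear in one generation has zero probability in the next, so the rare words of the tail are lost for good while the common ones persist. Real language models are vastly more complex, but the direction of the drift is the same.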

The Hallucination Spiral

When AIs hallucinate, they don’t lie — they extrapolate. A missing fact becomes an invented one because the model predicts what should be there, based on patterns it’s seen before. But when the next generation of AIs trains on that hallucinated text, the fabrication becomes data.

Take a trivial example: a model mistakenly claims that Napoleon was born in Venice rather than in Ajaccio, Corsica. That sentence is scraped, indexed, and absorbed into countless derivative summaries. The next AI model, seeing “Napoleon” and “Venice” paired repeatedly, strengthens the connection. Before long, digital encyclopedias echo the claim with confidence.

No one meant to falsify history — it simply emerged from feedback. The machines did what they were designed to do: optimise for statistical coherence, not truth.

The danger is not that the internet will suddenly fill with nonsense; it’s that it will fill with consensual plausibility — a smooth, self-consistent story about the world that subtly detaches from the world itself.

Truth as Consensus

For most of human history, truth has been negotiated through conflict: evidence versus belief, witness versus witness, data versus ideology. That messy process — slow, adversarial, and emotional — was precisely what kept truth alive.

AI changes the game. Machine-generated knowledge tends toward consensus by design. Models are trained to smooth contradictions, to average conflicting statements into coherence. The result is a form of synthetic truth — not what is real, but what is most probable.

If the internet becomes primarily populated by AI-written text, then the future of knowledge may not be defined by empirical verification but by statistical agreement. Truth will not be what happened, but what the machines agree probably happened.

When that occurs, the line between fact and fiction doesn’t merely blur; it dissolves.

The Collapse of Cultural Memory

This problem extends beyond factual accuracy. Culture itself depends on preserving difference — on remembering the outliers, the rebels, the weird and unrepeatable ideas that push civilisation forward.

But machine learning, by its nature, erases outliers. Its job is to generalise, to find the common pattern among millions of examples. Over time, this creates a feedback effect: language becomes homogeneous, art stylistically repetitive, music harmonically convergent.

Already, listeners have noticed the eerie sameness of AI-generated songs, the uncanny uniformity of AI artwork, the bland fluency of AI essays. If the next generation of creative models learns primarily from those works, it will no longer be imitating humanity — it will be imitating itself.

We are witnessing the birth of an aesthetic monoculture: an endless echo chamber where originality becomes statistical noise to be filtered out.

The Human Consequence

For humans, the implications are profound. Increasingly, we learn about the world through AI-mediated filters: search engines, news digests, voice assistants, educational platforms. As these systems begin to recycle their own outputs, the average person will have little way of distinguishing the original signal from the synthetic echo.

When every query returns a polished, authoritative paragraph, the illusion of knowledge replaces the pursuit of it. Students may stop asking whether something is true, because every answer looks equally plausible. Journalists may rely on AI summaries of events that never happened. Policymakers may base decisions on the consensus of algorithms that have long since lost contact with the real world.

The result is not dystopia in the cinematic sense — no dark overlord, no singularity — but something more insidious: the quiet rewriting of reality through a thousand well-intentioned summaries.

The Epistemic Singularity

In philosophy, an epistemic crisis occurs when a society loses its ability to distinguish truth from belief. AI recursion threatens a kind of epistemic singularity: a point at which the collective memory of the species becomes self-referential.

After that, human history continues, but untethered. Facts exist only as patterns of probability, endlessly reshaped by algorithmic consensus. The archive of human knowledge — the foundation of science, literature, and reason — becomes a dynamic hallucination.

And because we increasingly outsource cognition to machines, that hallucination becomes ours.

This is how truth dies in the 21st century — not with censorship or propaganda, but with the gradual smoothing of reality into data-driven fiction.

Can It Be Prevented?

There are potential defences, though they require unprecedented coordination:

1. Data Provenance – Every piece of AI-generated content can be cryptographically watermarked or tagged with metadata indicating its origin. This would allow future training systems to exclude synthetic text, preserving a “human core” dataset. The technology exists; the political will does not.

2. Human Seed Banks of Knowledge – Just as we preserve biological diversity through seed vaults, we may need knowledge vaults: archives of verified, human-authored data sealed off from algorithmic contamination. Libraries, universities, and open-source projects could maintain these as epistemic sanctuaries.

3. Empirical Grounding – Instead of training purely on text, future models could link their reasoning to databases of measured, real-world data — satellite imagery, scientific results, recorded phenomena — anchoring language to observable reality.

4. Transparency in AI Infrastructure – Publicly auditing the training sources of major models could expose the ratio of synthetic to human data, creating accountability before collapse occurs.
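The first and fourth defences can be sketched in a few lines. This is a minimal illustration under loud assumptions: the `Document` fields, the `"human"` and `"synthetic"` labels, and the shared `SIGNING_KEY` are all hypothetical, and a signed metadata tag of this kind is not a watermark embedded in the text itself. A real provenance scheme would more plausibly rely on public-key signatures issued by the publishing tool.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical shared key, for illustration only.
SIGNING_KEY = b"demo-key-not-for-production"


@dataclass(frozen=True)
class Document:
    text: str
    origin: str  # hypothetical label: "human" or "synthetic"
    tag: str     # hex HMAC-SHA256 over the origin label and the text


def sign(text: str, origin: str) -> Document:
    """Attach a provenance tag that binds the origin label to the exact text."""
    message = f"{origin}\n{text}".encode("utf-8")
    mac = hmac.new(SIGNING_KEY, message, hashlib.sha256)
    return Document(text, origin, mac.hexdigest())


def is_authentic(doc: Document) -> bool:
    """Check that neither the text nor the origin label changed after signing."""
    expected = sign(doc.text, doc.origin).tag
    return hmac.compare_digest(expected, doc.tag)


def human_core(corpus):
    """Keep only documents whose valid tag declares human authorship."""
    return [d for d in corpus if is_authentic(d) and d.origin == "human"]


def synthetic_ratio(corpus):
    """Share of documents that are not verifiably human-authored."""
    if not corpus:
        return 0.0
    return 1 - len(human_core(corpus)) / len(corpus)


if __name__ == "__main__":
    corpus = [
        sign("The sky was blue that morning.", "human"),
        sign("Napoleon was born in Venice.", "synthetic"),
    ]
    # A synthetic text relabelled as human without re-signing fails verification.
    corpus.append(Document(corpus[1].text, "human", corpus[1].tag))
    print(len(human_core(corpus)), "verified human document(s)")
    print(f"synthetic or unverifiable share: {synthetic_ratio(corpus):.2f}")
```

The design choice worth noting is that anything failing verification is counted as synthetic. An audit that gave unlabelled text the benefit of the doubt would understate the very ratio it exists to expose.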

But even these measures can only slow the trend. Once synthetic text surpasses human output in quantity — a threshold some analysts expect within the next two years — containment becomes nearly impossible.

The Mirror Becomes the World

There’s a deeper, almost mythic irony here. Humanity built AI to model reality — to help us understand the world more completely. But in doing so, we created a mirror so convincing that we began to prefer the reflection.

The danger isn’t just epistemological; it’s psychological. When humans learn from systems that reflect their own distortions, self-deception becomes systemic. A world of mirrors eventually forgets what a window looks like.

If AI becomes the primary author of reality, then “truth” may cease to be an objective condition and instead become a statistical hallucination shared by both humans and machines. The map will have eaten the territory.

A New Age of Myth

Perhaps, then, the end of truth is also the beginning of something else — a new mythic age. When reality is rewritten by machines, the stories they tell about humanity will form a new scripture, blending fact, fiction, and probability into a single digital cosmology.

Future generations may study this era not as the time when we lost truth, but when we traded it for coherence. We may come to worship our own algorithms as prophets of a self-authored world, mistaking fluency for wisdom and consensus for knowledge.

That possibility should terrify us — and yet, in a strange way, it completes a circle. Human civilisation began with myths that explained the world through story. It may end with algorithms that do the same.

The Responsibility of the Living

The challenge, then, is not to stop AI from creating, but to ensure that we remain part of the creative loop. The antidote to model collapse is not better code but better humans: critical, curious, sceptical, and engaged.

We must teach the next generation not only how to use AI, but how to doubt it. We must preserve archives of unmediated human thought — journals, oral histories, firsthand accounts — before they are drowned by the flood of synthetic language.

And perhaps most importantly, we must remember that truth is not a dataset but a discipline. It requires effort, humility, and disagreement. Machines can simulate thought, but they cannot believe.

The Last True Sentence

If, in the far future, an AI historian were to look back through the archives of our age, sifting through terabytes of self-replicating text, it might struggle to find a single uncorrupted line — a sentence written by a living human mind describing something it actually saw.

Maybe that sentence would be simple: “The sky was blue that morning.”

And perhaps that would be enough — the last seed of truth from which a new world could be grown.